Overview

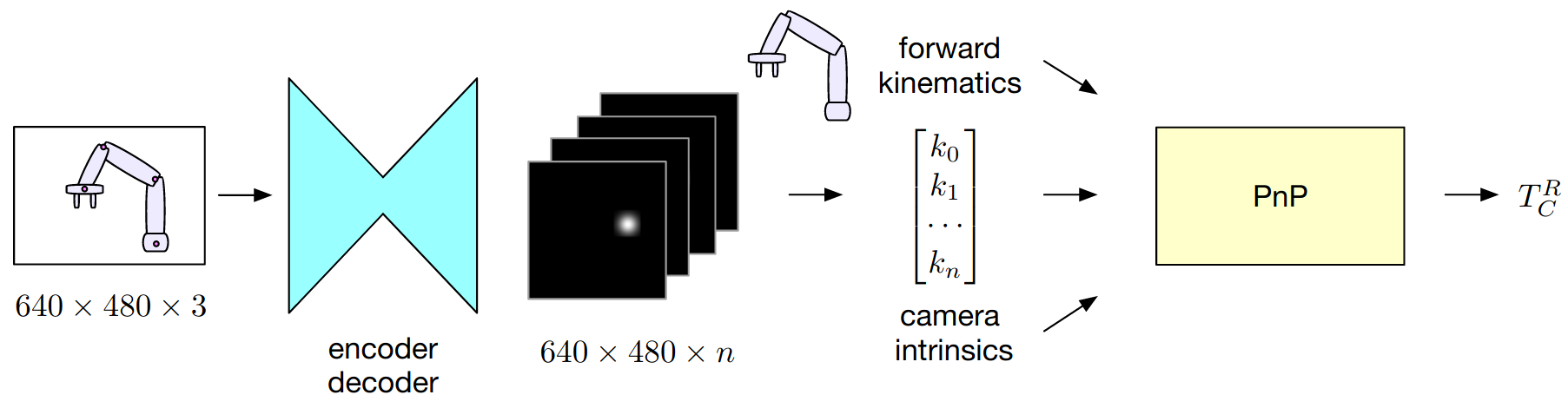

My collaborators and I developed an approach for camera calibration from a single RGB image of a robot manipulator. Named DREAM (Deep Robot-to-camera Extrinsics for Articulated Manipulators), the framework uses a robot-specific deep neural network to detect keypoints (typically at joint locations) in an RGB image of the manipulator. The detected 2D keypoint locations are paired with the corresponding 3D keypoint positions given by the robot's forward kinematics. From these correspondences, the Perspective-n-Point (PnP) algorithm estimates the camera pose with respect to the robot, which is the transform that calibration seeks. DREAM thus combines learning-based keypoint detection with a principled, geometric algorithm, drawing on both cutting-edge and classical vision approaches.

We evaluated the method extensively using the Franka Panda manipulator with several different cameras. Our approach compares favorably to a tried-and-true calibration method, hand-eye calibration, while requiring fewer images. This makes it a significant step toward efficient calibration, including calibration in online settings, which offline methods such as hand-eye calibration cannot handle.
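To make the PnP step concrete, here is a minimal sketch of recovering a camera pose from 2D-3D keypoint correspondences. It is not DREAM's implementation: it uses the classic Direct Linear Transform (DLT) PnP solver written with NumPy, and the helper names (`solve_pnp_dlt`, `project`, `rotation_from_axis_angle`), the camera intrinsics `K`, the keypoint coordinates, and the ground-truth pose are all hypothetical. The 3D points stand in for joint positions that forward kinematics would produce, and the 2D points stand in for the network's keypoint detections.

```python
import numpy as np


def rotation_from_axis_angle(axis, angle):
    """Rodrigues' formula: rotation matrix for `angle` radians about `axis`."""
    k = np.asarray(axis, dtype=float)
    k = k / np.linalg.norm(k)
    kx = np.array([[0.0, -k[2], k[1]],
                   [k[2], 0.0, -k[0]],
                   [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(angle) * kx + (1.0 - np.cos(angle)) * (kx @ kx)


def project(points_3d, R, t, K):
    """Pinhole projection of robot-frame points into pixel coordinates."""
    cam = points_3d @ R.T + t          # robot frame -> camera frame
    uvw = cam @ K.T                    # apply intrinsics
    return uvw[:, :2] / uvw[:, 2:3]    # perspective divide


def solve_pnp_dlt(points_3d, points_2d, K):
    """Estimate (R, t) with X_cam = R @ X_robot + t using DLT-based PnP.

    Hypothetical helper; needs at least 6 non-coplanar correspondences.
    """
    n = points_3d.shape[0]
    # Normalized image coordinates remove the intrinsics from the problem.
    uv1 = np.hstack([points_2d, np.ones((n, 1))])
    xy = (np.linalg.inv(K) @ uv1.T).T[:, :2]
    Xh = np.hstack([points_3d, np.ones((n, 1))])  # homogeneous 3D points

    # Each correspondence gives two linear equations in the 12 entries of P = [R | t]:
    #   x * (p3 . X) - p1 . X = 0   and   y * (p3 . X) - p2 . X = 0
    A = np.zeros((2 * n, 12))
    for i in range(n):
        x, y = xy[i]
        A[2 * i, 0:4] = -Xh[i]
        A[2 * i, 8:12] = x * Xh[i]
        A[2 * i + 1, 4:8] = -Xh[i]
        A[2 * i + 1, 8:12] = y * Xh[i]

    # Least-squares solution: right singular vector with the smallest singular value.
    P = np.linalg.svd(A)[2][-1].reshape(3, 4)

    # P is known only up to scale (including sign); pick the sign with det > 0.
    if np.linalg.det(P[:, :3]) < 0:
        P = -P

    # Project the left 3x3 block onto the nearest rotation and recover the scale.
    U, S, Vt = np.linalg.svd(P[:, :3])
    R = U @ Vt
    t = P[:, 3] / S.mean()
    return R, t


# --- Synthetic check with hypothetical values (not data from DREAM) ---
K = np.array([[615.0, 0.0, 320.0],
              [0.0, 615.0, 240.0],
              [0.0, 0.0, 1.0]])
# Hypothetical keypoints in the robot base frame (meters), standing in for
# joint locations computed by forward kinematics.
keypoints_robot = np.array([
    [0.00, 0.00, 0.00],
    [0.00, 0.00, 0.33],
    [0.10, 0.05, 0.60],
    [0.30, -0.10, 0.70],
    [0.45, 0.20, 0.50],
    [0.50, 0.10, 0.30],
    [0.20, 0.30, 0.20],
])
R_true = rotation_from_axis_angle([0.2, 1.0, 0.1], 0.4)
t_true = np.array([0.1, -0.2, 2.0])
keypoints_px = project(keypoints_robot, R_true, t_true, K)  # stand-in for detections

R_est, t_est = solve_pnp_dlt(keypoints_robot, keypoints_px, K)
# Camera pose expressed in the robot base frame (the calibration transform).
cam_rotation_in_robot = R_est.T
cam_position_in_robot = -R_est.T @ t_est
```

With noise-free synthetic keypoints, as here, the linear DLT solution recovers the pose exactly up to floating-point error. With real, noisy detections, a linear estimate like this is typically refined by minimizing reprojection error, or replaced by a library solver such as OpenCV's `cv2.solvePnP`.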

This was my internship project at NVIDIA Seattle Robotics Lab during Summer 2019.