CMU DNS: Cleaning out the Augean Stables

G. Somlo, May 2010

DNS (Domain Name Service) is one of the most highly visible services supported by the Network Group. Any outage is almost guaranteed to be immediately noticed by end users, and, as such, it is of utmost importance to ensure the high availability of the service through careful design for redundancy, failover, and load sharing.

The purpose of this writeup is primarily to document the new, recently deployed system. However, to better illustrate our progress, and justify some of the technical decisions that were made, I will compare and contrast key differences between the current state of affairs and where I started.

In a nutshell, we started out with a system consisting of 18 servers interacting in ways both complex and unpredictable, with configurations automatically generated by a poorly understood NetReg subsystem (dns-config.pl) which both lacked documentation and was perfectly willing to generate broken, unloadable DNS configurations without so much as a warning. We now have a three-tiered architecture, where all servers within a tier or class are identically configured, allowing for virtually free horizontal scaling (cost of hardware notwithstanding). With geographical redundancy built in, this system could be deployed on as few as five total servers (we currently use 10, for symmetry -- see Section 3.1). The dns-config.pl configuration file generator has been completely rewritten, and will now issue useful warnings while refusing to generate broken configurations. The NetReg mechanism by which DNS servers get populated with zone data and configuration is now well understood and documented (see Section 4).

The remainder of this document is organized as follows:

Section 2 delves into the various underlying mechanisms for implementing IP anycast, which is the essential building block for providing both load sharing and seamless failover in the event of hardware failure or maintenance.

Section 3 showcases the overall architecture of the new system, explains the role played by each of the three server tiers, and describes how the tiers interoperate with each other.

Section 4 documents NetReg's DNS Server Group service (read: object) type, the primary interface by which a NetReg admin is currently expected to make DNS changes. Our current methodology for supporting DNSSEC is presented in Section 5.

Section 6 concludes with ideas for future work, such as offering service on IPv6, and improving NetReg to better support the management of restricted DNS views for special-purpose clients.

DNS has a very simple built-in failover mechanism: all speakers of the DNS protocol (clients as well as servers) typically encourage (and often require) that at least two server IP addresses be configured for every DNS "reference". To illustrate this point, let's consider DNS end clients (stub resolvers, in DNS parlance) found on most users' desktop, laptop, and handheld devices. In addition to a primary DNS server address to which all queries are sent by default, the device asks (and sometimes insists) that at least one more, secondary DNS server address be configured, to which queries are sent in the event the primary server becomes unavailable. On our campus network, the two (or more) DNS server IP addresses are automatically set via DHCP, but can still be viewed by running ipconfig /all from a Windows shell, or by viewing the contents of /etc/resolv.conf on Mac and Linux machines. Similarly, when DNS servers refer to one another for purposes such as forwarding or delegation, best practices encourage listing multiple server IPs as targets of each forward or delegation, so that a backup is available when the primary target fails.
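The client-side failover described above can be sketched as a loop over the configured servers. This is a simplified model, not any particular resolver's code: each server is assumed to be a callable that either answers or raises `TimeoutError`, and the 5-second per-server timeout is an assumed value. Time spent waiting is added up rather than actually slept, which makes the cost of a dead primary visible.

```python
from typing import Callable, Optional, Sequence, Tuple

# Assumption: a server is modeled as a callable that returns an answer or
# raises TimeoutError; waiting time is counted, not actually slept.
Query = Callable[[str], str]


def resolve(name: str, servers: Sequence[Query],
            timeout: float = 5.0) -> Tuple[Optional[str], float]:
    """Try each configured server in order; return (answer, seconds waited)."""
    waited = 0.0
    for query in servers:
        try:
            return query(name), waited
        except TimeoutError:
            waited += timeout  # the client sits out the whole timeout first
    return None, waited


def dead_server(name: str) -> str:
    raise TimeoutError(name)


def live_server(name: str) -> str:
    return "192.0.2.53"  # hypothetical answer
```

With the primary down, `resolve("www.cmu.edu", [dead_server, live_server])` still returns an answer, but only after a full timeout has elapsed.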

This failover method is not always sufficient in real-world, real-time scenarios. Interactive DNS record lookups (e.g., using 'host', or from within a Web browser) will merely take a few seconds longer while the secondary server is being queried, and mostly go unnoticed. There are, however, situations in which a few extra seconds of DNS response delay lead to highly visible outages. For example, the sshd remote login service will attempt a reverse lookup on each connecting client's IP address (for reasons as trivial as generating a more informative log entry). When the lookup doesn't complete within sshd's time limit, the login attempt times out, resulting in denial of service to ssh clients. It is, of course, possible to configure sshd to forgo reverse DNS lookups on connecting clients. However, rather than push this cost onto every system administrator, and separately address every other case where real-time DNS responses are required, it is much more economical to simply make DNS more reliable, as demonstrated in the remainder of this section.

Another highly visible outage scenario occurs when DNS forwarding is used. When a DNS server forwards a client query to another server, the client may give up before a second forwarder has a chance to respond. In effect, having secondary (and tertiary, etc.) forwarders may be a complete waste of resources. This specific failure scenario was observed in the field only a few months ago.

IP anycast is a technique by which one or more IP addresses are advertised, via the underlying routing protocol, from more than one physical location. Applied to DNS, this means configuring a well-known DNS IP address (e.g., 128.2.1.10) on multiple servers, and then announcing a host route to this address pointing at each server.

The primary application for anycast is load sharing: each server's advertised anycast IP route "attracts" the "closest" clients' queries, where "distance" is determined by the routing protocol. As will become apparent in Section 3, this also means that server placement within the network is very important to ensure an even load distribution.

Failover can be accomplished if the individual routes comprising an anycast virtual server IP are announced conditionally, based on each real server's availability, and can be quickly withdrawn in the event of a failure. Once a real server's anycast route to the virtual IP is pulled, the underlying routing protocol will redistribute client queries across the remaining real servers.
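The effect of conditional announcements on load sharing and failover can be sketched with a toy model. The assumptions are loud ones: the network is reduced to a hypothetical table of routing costs from each client to each server (standing in for what OSPF would compute), a server's host route is visible only while it is in the `healthy` set, and ties are broken by server name.

```python
from typing import Dict, Optional, Set

# Assumption: client -> {server: routing cost}, a stand-in for OSPF distances.
Costs = Dict[str, Dict[str, int]]


def pick_server(client: str, costs: Costs, healthy: Set[str]) -> Optional[str]:
    """Return the closest server still announcing the anycast route."""
    candidates = [(cost, server) for server, cost in costs[client].items()
                  if server in healthy]  # withdrawn routes are simply invisible
    if not candidates:
        return None  # no announcements left: total outage
    return min(candidates)[1]  # lowest cost wins; ties go to the lower name


# Hypothetical topology: two clients, three anycast servers.
COSTS: Costs = {
    "client-a": {"dns1": 10, "dns2": 20, "dns3": 30},
    "client-b": {"dns1": 30, "dns2": 20, "dns3": 10},
}
```

Removing `dns1` from the healthy set moves `client-a` to `dns2` without any client reconfiguration, which is the property the remainder of this section relies on.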

This method was in use on the recently phased-out campus DNS infrastructure, before the upgrade. Each DNS server was expected to participate in the campus backbone routing protocol (OSPF), and announce the virtual DNS service IP(s) it had configured as extra loopbacks using Quagga (an open source routing software package).

This method suffered from severely limited failover capabilities. In the event of the entire machine losing power, network connectivity, or crashing, its announced anycast route went away along with its availability for DNS service, which in turn allowed clients to be re-routed to other available servers. However, in the event of a failure limited to the DNS software (bind/named), the Quagga routing daemon would continue fulfilling its intended purpose (to announce routes for locally configured loopback IPs, in this case), causing a denial of service to those clients which had the misfortune of having their DNS queries routed to this server. A partial outage of this type would last until, through manual intervention, either the DNS software was restored to operational status on the server, or Quagga was shut down to stop announcing the DNS anycast IP and thus stop attracting clients whose queries would then be dropped.

One potential way to improve this situation would have been to add support for probes and conditional route announcements to Quagga (similar to SLA, see Section 2.5) and submit the patch upstream. Another, less robust, but still workable solution would have been to write a small script (e.g., a simple cron job) to shut off Quagga in the event that bind/named was unresponsive.
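The "small script" alternative can be sketched as a watchdog with injected actions. Everything here is hypothetical: `probe` stands in for a real DNS query against the local server, and `stop_routing` for whatever command would shut down Quagga on the host. Requiring several consecutive failures is an assumed design choice that keeps a single dropped packet from pulling the route.

```python
from typing import Callable


class AnycastWatchdog:
    """Hypothetical watchdog: withdraw the anycast route when named stops answering."""

    def __init__(self, probe: Callable[[], bool],
                 stop_routing: Callable[[], None],
                 max_failures: int = 3) -> None:
        self.probe = probe                # assumption: True if named answered
        self.stop_routing = stop_routing  # assumption: shuts Quagga down
        self.max_failures = max_failures
        self.failures = 0
        self.routing_stopped = False

    def run_once(self) -> None:
        """One cron-style invocation: probe, count, and act if needed."""
        if self.routing_stopped:
            return  # already withdrawn; restoring it stays a manual step
        if self.probe():
            self.failures = 0
            return
        self.failures += 1
        if self.failures >= self.max_failures:
            # Stopping Quagga withdraws the route so OSPF steers clients elsewhere.
            self.stop_routing()
            self.routing_stopped = True
```

A real cron job starts fresh on every run, so it would have to persist the failure counter between invocations (e.g. in a file); the class keeps it in memory for simplicity.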

Instead, the adopted solution was one entirely worthy of Rube Goldberg. An extra machine-specific loopback IP was set up on each server and added to the list of IPs monitored for DNS service availability, on the theory that:

- if bind/named died, we'd get paged for DNS on:
  - the server's real (management) IP address,
  - the server's dedicated loopback IP address, and
  - intermittently, the DNS service IP (since the monitoring system was equally "far" away from all servers in terms of OSPF distance, and thus could hit any of them with equal probability when querying the anycast service IP),

  but all the above IPs would still be reachable via ping;
- if Quagga died, we'd only get paged on the server-specific loopback;
- if the entire machine became unreachable, we'd get paged for everything.

Additionally, if clients were configured with two anycast service IPs (primary and secondary, see Section 2.1), these two IPs had to be supported on entirely separate and non-overlapping pools of hardware. In the event that bind/named failed on a server announcing both primary and secondary anycast IPs via a still-operational Quagga daemon, clients failing over from primary to secondary would have their queries routed back to the same failed server, guaranteeing a full DNS blackout instead of simply degraded service.

In conclusion, once the appropriate number of servers to support the primary DNS anycast IP was determined, it had to be doubled in order to support a secondary IP for the same client population, requiring a ridiculously high number of DNS servers which spent the majority of their time idling.

SLB (Server Load Balancer) is a feature included with the Cisco 6500 hardware platform on which our campus routing infrastructure is built. While SLB is mainly intended to offer rich support for various load balancing policies, we use it for its ability to probe service availability on real servers and to conditionally announce (anycast) routes for virtual service IPs based on the probes' result. Our new, upgraded DNS infrastructure currently uses SLB to implement IP anycast, offering both load sharing (one real server for each tier -- see Section 3 -- advertised from each distribution router) and fast failover (announcements are withdrawn within 10 seconds if probing detects a failed server).

With SLB, individual servers no longer have to run anything beyond bind/named, greatly simplifying administration and monitoring. However, the most important gain obtained by moving anycast support off the servers and back into the network infrastructure was that the same hardware pool of identically configured machines can now support both primary and secondary anycast IPs. Since SLB immediately removes all anycast routes pointing at a failed server, we have eliminated all scenarios in which client traffic would have been sent to failed servers.

For an example of how SLB is configured to manage and monitor DNS servers, consider the following fragment of IOS configuration deployed on each distribution router (see

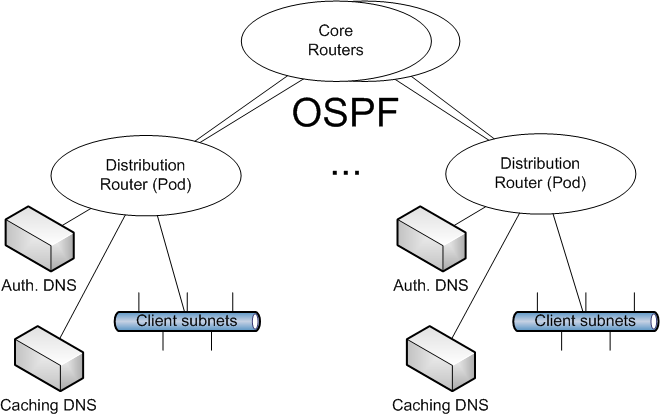

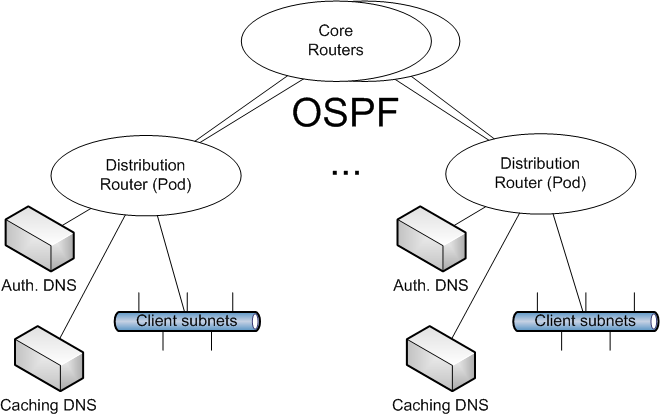

Figure 1 for the relevant network topology elements of the campus backbone, post-DNS-overhaul):

```
ip slb probe CMUDNS dns
 lookup cmu.edu
!
ip slb serverfarm CMUDNS
 predictor leastconns
 probe CMUDNS
 !
 real IP.OF.REAL.DNS.SERVER
  no faildetect inband
  inservice
!
ip slb serverfarm CMUDNS-BAK
 predictor leastconns
 !
 real IP.OF.CORE.ROUTER
  no faildetect inband
  inservice
!
ip slb vserver CMUDNS-UDP-10
 virtual 128.2.1.10 udp dns
 serverfarm CMUDNS backup CMUDNS-BAK
 advertise active
 idle 4
 inservice
```

SLB "vservers" must be configured per IP and per protocol; the vserver snippets for TCP and for the secondary service IP (128.2.1.11) were omitted for brevity.

Each distribution router will forward DNS queries to the local real server, if it is available. In the event of a failure, queries are routed to the core, which then evenly spreads them out across the remaining distribution routers with operational DNS servers.

SLA (Service Level Agreements) is another technology available on the Cisco 6500 platform, beginning with IOS version 12.2(33)SXI. It directly allows the insertion of conditional route statements into the running configuration, and is therefore much simpler and more lightweight than SLB. Also, SLA has a much better chance of becoming supported under IPv6 in future Cisco IOS releases. We plan to switch our DNS IP anycast implementation to SLA as soon as all distribution routers are upgraded to SXI.

The equivalent configuration snippet for implementing anycast using SLA is:

```
ip sla 100
 dns cmu.edu name-server IP.OF.REAL.DNS.SERVER
 frequency 10
 exit
!
ip sla schedule 100 life forever start-time now
!
track 100 ip sla 100
 exit
!
ip route 128.2.1.10 255.255.255.255 IP.OF.REAL.DNS.SERVER track 100
ip route 128.2.1.11 255.255.255.255 IP.OF.REAL.DNS.SERVER track 100
```

Packets destined for one of the DNS service IPs will simply be routed to the real server, but only as long as the monitor confirms the service is indeed available. Otherwise, the local route(s) are withdrawn, and client traffic is free to follow OSPF to whichever other distribution router still announces routes to the service.

NOTE: In the above example, the real server is sent a query for cmu.edu, and an A record is specifically expected in return. There is currently no way to specify a different record type, so we must be sure to query for a known A record to determine DNS service availability on the probed host.
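To make the probe's pass/fail rule concrete, here is a standard-library sketch of an equivalent check: build an RFC 1035 query for an A record, then decide whether a raw reply answers it with at least one A record. This illustrates the idea and is not Cisco's implementation. The query ID and helper names are assumptions. Sending is left to the caller, e.g. via `socket.sendto` to UDP port 53 on the real server, with the reply passed to `has_a_record`.

```python
import struct

TYPE_A, CLASS_IN = 1, 1


def build_query(name: str, query_id: int = 0x1234) -> bytes:
    # Header: ID, flags (RD set), QDCOUNT=1, ANCOUNT=NSCOUNT=ARCOUNT=0.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    qname = b"".join(bytes([len(label)]) + label.encode("ascii")
                     for label in name.rstrip(".").split(".")) + b"\x00"
    return header + qname + struct.pack("!HH", TYPE_A, CLASS_IN)


def _skip_name(msg: bytes, offset: int) -> int:
    """Return the offset just past a (possibly compressed) domain name."""
    while True:
        length = msg[offset]
        if length & 0xC0 == 0xC0:  # compression pointer: 2 bytes, ends the name
            return offset + 2
        if length == 0:
            return offset + 1
        offset += 1 + length


def has_a_record(reply: bytes, query_id: int = 0x1234) -> bool:
    """True if `reply` is a successful response carrying an IN A answer."""
    rid, flags, qdcount, ancount = struct.unpack("!HHHH", reply[:8])
    if rid != query_id or not flags & 0x8000 or flags & 0x000F:
        return False  # wrong ID, not a response, or nonzero RCODE
    offset = 12
    for _ in range(qdcount):
        offset = _skip_name(reply, offset) + 4  # skip QTYPE and QCLASS
    for _ in range(ancount):
        offset = _skip_name(reply, offset)
        rtype, rclass, _ttl, rdlength = struct.unpack(
            "!HHIH", reply[offset:offset + 10])
        if rtype == TYPE_A and rclass == CLASS_IN:
            return True
        offset += 10 + rdlength
    return False
```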

Figure 1. Network Topology vs. DNS Server Placement

Prior to the upgrade, we had a relatively large number of DNS server classes, each responsible for a separate subset of our (sub)domains, which made the overall system unnecessarily difficult to comprehend. To illustrate, we had:

- Top-level servers: Used to host domains such as cmu.edu, cmu.net, carnegiemellon.org, cmu.local, and the corresponding reverse zones such as 2.128.in-addr.arpa. These servers also hosted subdomains not enabled for dynamic DNS updates (DDNS): ad.cmu.local, andrew.cmu.local, etc.

- Dotcom servers: Similar to the top-level class above, these servers hosted various .com, .org, etc. "vanity" domains for various campus projects and groups. Hosting these domains separately originated from an overly paranoid fear of possible future Internet2 and Educause policies against serving domains that could be interpreted as "commercial".

- DDNS servers: Used to host subdomains such as andrew.cmu.edu, net.cmu.edu, etc., which support DDNS updates (from other services such as DHCP, load balancers, and even NetReg itself). Separating domains with support for DDNS from those without allegedly originated with a bug in earlier versions of bind/named, which reportedly caused problems when dynamically updating certain types of records.

- Caching servers: These supported the anycast addresses advertised to the campus client population.

Within each of the first three categories, one server was designated as master, while the rest acted as slaves. Sometimes the master itself was used to serve queries, and sometimes it was used in "shadow" mode, where it was only allowed to serve zone transfer requests from its slaves, which in turn supported the publicly visible service.

After the DNS infrastructure overhaul, only two server classes remain: caching and authoritative. Each class is accessed via two anycast service IPs (primary and secondary), which are both supported on every class member. For reasons of symmetry (so we wouldn't have to designate two distribution routers as "special" by having them host DNS servers) we have one cache and one authoritative server connected to each distribution router. This way, not only are all DNS servers equal (within their designated class), but all distribution routers are also equal (by supporting the same anycast statements to advertise the anycast DNS service IPs). Last, but not least, each distribution router's clients are exactly one Layer-3 routing hop away from their default DNS server. A simplified network diagram illustrating DNS server placement is shown in Figure 1.

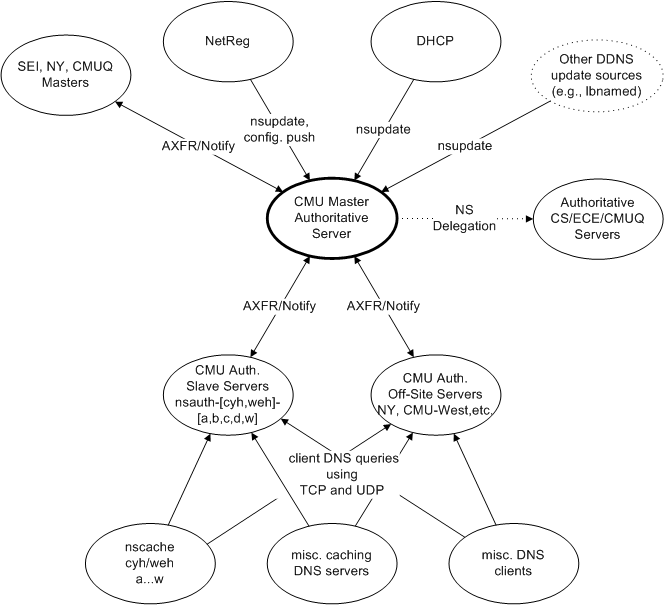

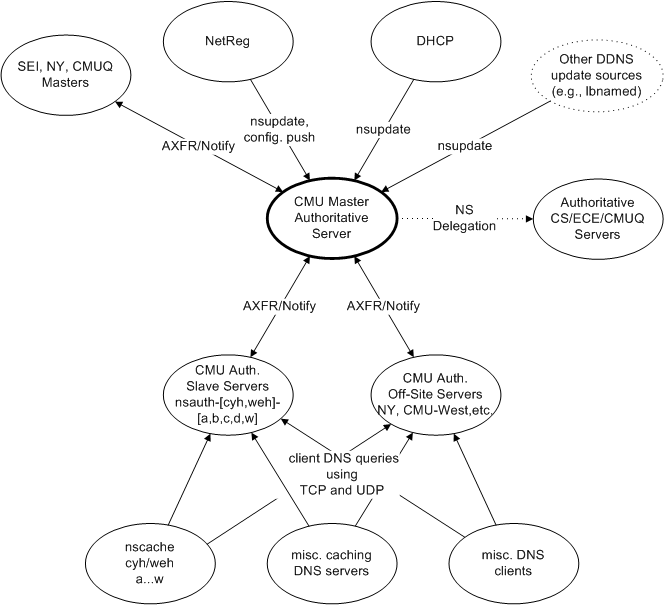

Figure 2. Overview of the CMU campus DNS architecture

The only special case server is the "shadow" authoritative master, which is the single point of update for all external systems (such as NetReg, dhcp, lbnamed, etc.). All anycast-enabled authoritative servers are configured as slaves to this machine. Redundancy and failover for the shadow master is accomplished through VMotion, as the shadow master is deployed as a guest on our VM infrastructure. Since queries for authoritative DNS data are only ever sent to the authoritative anycast service IPs, a temporary software failure on the shadow master will only impede the ability to enact further DNS updates, but never prevent querying existing data. An illustration of how the various servers interact with each other is given in Figure 2.

We can now count the minimum number of servers required to support this architecture with physical redundancy:

- one shadow master,

- two authoritative slaves (each supporting two authoritative anycast service IPs), and

- two caching servers (both supporting the two anycast IPs advertised to campus clients),

for a total of five machines. As explained earlier, we currently run five caches and four authoritative slaves (without much extra effort, since all caches and all authorities are configured identically to each other), which, together with the shadow master, makes for a total of 10 servers.

Another issue related to how our servers interact is configuring caches to bypass the DNS root. This is a requirement when resolving *.local names or RFC1918 reverse lookups such as *.16.172.in-addr.arpa. Since these domains are expected to be private to each site that supports them, the DNS root servers must necessarily fail to resolve any related queries. Therefore, caches that begin resolving such queries at the DNS root are guaranteed to fail, put undue load on the root servers (poor netiquette), and advertise the lack of skill of their administrators to the Internet community.

Caches must instead be configured to begin recursion for any *.local or reverse RFC1918 queries directly at our own authoritative servers. It is also a handy improvement in response time to bypass the DNS root for *.cmu.edu, *.2.128.in-addr.arpa, and any other top-layer domain known to be hosted on our authoritative servers.
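Deciding which names should skip the root amounts to a label-wise suffix match against the locally hosted top-layer zones. The sketch below assumes an illustrative zone list built from the examples in the text plus the full set of RFC 1918 reverse zones (172.16.0.0/12 covers second octets 16 through 31); the authoritative list is whatever the caches' named.conf actually contains.

```python
from typing import List


def rfc1918_reverse_zones() -> List[str]:
    zones = ["10.in-addr.arpa", "168.192.in-addr.arpa"]
    # 172.16.0.0/12 spans second octets 16 through 31.
    zones += [f"{octet}.172.in-addr.arpa" for octet in range(16, 32)]
    return zones


# Assumption: illustrative list of zones hosted on our authoritative servers.
LOCAL_ZONES = ["local", "cmu.edu", "2.128.in-addr.arpa"] + rfc1918_reverse_zones()


def bypasses_root(name: str) -> bool:
    """True if `name` is at or below one of the locally hosted zones."""
    labels = name.lower().rstrip(".").split(".")
    for zone in LOCAL_ZONES:
        zlabels = zone.split(".")
        # Compare whole labels so that e.g. "notcmu.edu" does not match "cmu.edu".
        if labels[-len(zlabels):] == zlabels:
            return True
    return False
```

Matching whole labels rather than raw string suffixes matters here: `4.3.15.172.in-addr.arpa` is public address space and must still go to the root.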

Two methods exist to configure a cache to bypass the DNS root: zones can be configured as either "forward" or "stub". The former was used extensively with our previous DNS infrastructure, but has now been abandoned in favor of the latter, which brings about vast improvements in simplicity and flexibility.

To bypass the DNS root for all *.cmu.local lookups using "forward", we'd have to start by adding the following to the cache's named.conf file:

```
zone "cmu.local" {
    type forward;
    forwarders { IP.OF.AUTH.1; IP.OF.AUTH.2; ... };
};
```

If a query were received for something.cmu.local, it would be forwarded to the first IP address on the forwarders list. The caching server would expect a full answer, which it would then return to its client, and also cache for future reference. The big drawback to this method is that any server listed as a forwarder is expected to either be able to answer the query authoritatively, or be willing to recurse on the forwarding cache's behalf. Answering with a delegation is considered a forwarding failure, and the cache would proceed to query the next IP on its list of forwarders. Assuming that, on the forwarders, a subdomain of cmu.local (e.g. cs.cmu.local) was delegated to another set of servers, it would be necessary to either configure the forwarders to allow recursion from our cache, or to add another entry for zone "cs.cmu.local" to the caching server's named.conf, forwarding to the new set of authorities for that domain.

Needless to say, using this method made our old server configuration brittle and complex, by requiring that data (delegations are accomplished by inserting NS records into the parent zone) be replicated in configuration (the same servers mentioned in the NS delegation records now had to be listed as forwarders in named.conf on the caches). It also required that our authoritative servers act as caches (but only to our caching servers), adding to the overall Rube Goldberg-esque nature of the entire system.

The proper solution to the problem of bypassing the DNS root is to use "stub" zones instead:

```
zone "cmu.local" {
    type stub;
    masters { IP.OF.AUTH.1; IP.OF.AUTH.2; ... };
};
```

This statement simply forces the cache to always keep a copy of the zone's NS records up to date from the listed masters. The other piece of the puzzle is the fact that the DNS recursion algorithm (by which a cache starts at the DNS root and keeps following delegations until it finds a name server which can answer authoritatively) is, in fact, opportunistic. The recursion only starts at the DNS root if there are no better (i.e. more specific) servers already cached. In conclusion, using "stub" will not only bypass the root, but also allow the cache to follow any sub-delegations (e.g. to cs.cmu.local) without requiring them to be spelled out in the actual config file.
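The "opportunistic" starting point can be illustrated with a small model of the cache. Assumptions: the cache's knowledge is reduced to a dict from zone name to the name servers it currently holds NS records for, "." (the root) is always present via root hints, and all server names are hypothetical. The stub zone's only job is to guarantee that the `cmu.local` entry exists and stays fresh.

```python
from typing import Dict, List, Tuple

# Assumption: zone -> cached NS names; "." is always primed from root hints.
Cache = Dict[str, List[str]]


def starting_point(qname: str, cache: Cache) -> Tuple[str, List[str]]:
    """Return the deepest cached zone enclosing `qname` and its servers."""
    labels = qname.lower().rstrip(".").split(".")
    # Walk from the full name toward the root; the first hit is the most specific.
    for i in range(len(labels)):
        zone = ".".join(labels[i:])
        if zone in cache:
            return zone, cache[zone]
    return ".", cache["."]


cache: Cache = {
    ".": ["a.root-servers.net"],
    "cmu.local": ["auth1.example", "auth2.example"],  # kept fresh by the stub zone
}
```

A query for `host.cs.cmu.local` starts at `cmu.local`; once the authorities' referral for `cs.cmu.local` is cached, later queries start there instead, with no extra configuration.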

Using "stub" allowed a much cleaner and simpler configuration of our servers: authorities are no longer expected to perform recursion for anyone, and caches only need explicit configuration entries for the top layer (local, cmu.edu, etc.) of domains we serve, while subdomains are resolved by following any applicable delegations returned by the authorities.

Campus DNS servers continue to have their configuration and zone data generated and pushed from NetReg (our homebrew IPAM system). At a high level, DNS management in NetReg occurs via two types of objects (or "service groups"): DNS Server Group and DNS View Definition. Two scripts, dns-config.pl and dns.pl, generate configuration and zone files, respectively. At a lower level, the configuration elements of the NetReg service groups were lacking documentation, and the scripts were poorly written (and therefore mostly unreadable). Also, at least in the case of dns-config.pl, they allowed the creation of broken, unloadable configuration files with absolutely no warning or feedback of any kind.

The purpose of this section is to document NetReg's DNS configuration elements, explain how they affect the resulting configuration files, and serve as a guide to administrators wishing to make DNS changes via NetReg. This is knowledge I acquired during a rewrite of dns-config.pl.

NetReg service groups are simply sets of other NetReg objects of various types (machine, dns_zone, service, etc.), with various attributes that may be attached either to individual members, or to the set as a whole. Other, separate logic (such as the dns-config.pl config file generator) is tasked with assigning meaning to these object sets and taking action based on their contents.

Let's begin by examining the layout of our typical named.conf file:

```
options {
    GLOBAL OPTIONS;
};

keys and ACLs (master server only);

view "some_view" {
    VIEW OPTIONS;
    zone blocks ...
};

...

view "global" {
    zone blocks ...
};
```

Note how the "global" view does not have its own set of options. Instead, the 'GLOBAL OPTIONS' from the top of the file apply. Other views may come with their own options which override or add to the inherited global ones. Master servers may contain keys and ACLs which control the DDNS update process.

DNS options (whether global or view-specific) are added using the DNS View Definition service group type (improperly named; it should be something like 'DNS Option Set' instead). This type of object contains no members, and is used only as a collection of attributes, which may be of the following types:

Server Version: Other options and attributes contained in this group will only be applied to servers with matching version strings.

Import Unspecified Zones: Controls whether zones which have no Zone In View attributes configured on their DNS Server Group membership will be added to views generated based on this group (see 4.1.2 below). By default (if unset), the value of this attribute is assumed to be 'yes'.

DNS Parameter: Option lines which will be added to either a specific view, or to the global options of named.conf, depending on this group's Service View Name attribute in the parent DNS Server Group. No error checking is performed on the values of this attribute, so caution must be taken to avoid introducing syntax errors into the resulting config file.

The DNS Server Group is the main DNS configuration mechanism offered by NetReg. Its purpose is to tie together DNS servers (machine type members) with zones (dns_zone type), and views (supplied by adding DNS View Definition groups as service type members). For a DNS Server Group to have any effect, it must contain machines, and at least one of either a set of zones or a set of views. Any group which contains all three -- machines, zones, and views -- can be separated out into two equivalent groups, one assigning the zones to the machines, and another one adding views to the same machines. In fact, the latter method of keeping views and zones in separate DNS Server Group objects is strongly recommended, as a way of preserving the legibility of NetReg configuration.

Machine members of a DNS Server Group may have the following attributes set:

Server Version: Specifies the type and version of DNS software expected to run on that machine. A machine with this attribute unset may be used as a configuration element in other servers' config files, but will not have any configuration elements generated based on its membership in the current service group. Also, a machine must have this attribute set to the same value across all service groups of which it is a member, unless omitted (e.g., setting the version to 'bind9' in one service group and to 'foobar123' in another is considered an error, and dns-config.pl will complain loudly; setting to 'bind9' in one group and not setting it at all in another is OK, and only the first group will lead to data being added to the machine's named.conf). Currently, dns-config.pl will skip generating a config file for a server if the version is set to something other than 'bind9'.

Server Type: Specifies the role this server will play relative to the zones assigned to it by this service group. Allowed values are 'master', 'slave', 'stub', 'forward', and 'none', with the latter assumed to be the default when the attribute is omitted.

A server's membership in any DNS Server Group will only lead to entries being added to its named.conf if both a valid version and a valid type (other than 'none') are set. A machine with a valid type and no version may end up being referred to in the named.conf of other group members, but will not receive any entries in its own config file based on its membership in this service group.
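The version and type rules above can be condensed into two small checks. This is a hypothetical restatement, not code from dns-config.pl: a machine's memberships are modeled as `(group, version, type)` tuples with `None` for an unset attribute, and the function names are invented for illustration.

```python
from typing import List, Optional, Tuple

# Assumption: (group name, Server Version, Server Type); None means "unset".
Membership = Tuple[str, Optional[str], Optional[str]]
VALID_TYPES = {"master", "slave", "stub", "forward"}  # 'none' is the default


def check_version(memberships: List[Membership]) -> Optional[str]:
    """Return the machine's single version, or raise if groups disagree."""
    versions = {v for _, v, _ in memberships if v is not None}
    if len(versions) > 1:
        raise ValueError(f"conflicting Server Version values: {sorted(versions)}")
    return versions.pop() if versions else None


def generating_groups(memberships: List[Membership]) -> List[str]:
    """Groups that contribute entries to this machine's own named.conf."""
    if check_version(memberships) != "bind9":
        return []  # no config file for non-bind9 (or entirely versionless) machines
    return [g for g, v, t in memberships
            if v is not None and (t or "none") in VALID_TYPES]
```

Setting 'bind9' in one group and leaving the version unset in another is accepted, and only the first group generates entries, matching the example in the Server Version description.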

Service (i.e., view) members of a DNS Server Group may have the following attributes:

Service View Name: Specifies the name of the view which will be created on the group's machine members, and to which the options contained in this view member will be added. Using '_default_' or 'global' will result in the options being added to the named.conf global options section. Using anything different will result in the creation of a view with that name on all applicable machine members, with all options added under that view.

Service View Order: Specifies a view's relative position in named.conf as compared to all other views. Has no effect on 'global', which always comes last. Defaults to '0' (earliest order) if not set.

Zone members of a DNS Server Group may have the following attributes:

Zone In View: If no attributes of this type are configured on a zone, it is considered 'unspecified' (see 4.1.1). Unspecified zones are added to any view which does not forbid it by setting Import Unspecified Zones to 'no'. If one or more Zone In View attributes are set, the zone will only be added to the views thus specified.

Zone Parameter: Specifies an arbitrary named.conf line to be added inside a zone's configuration block. Multiple such attributes may be added to a zone. No error checking is performed on the values of this attribute, so caution must be taken to avoid introducing syntax errors into the resulting config file.
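The interaction between Zone In View and Import Unspecified Zones reduces to a two-branch rule, sketched here with plain Python values standing in for the NetReg attributes (the parameter names are hypothetical):

```python
from typing import Optional, Sequence


def zone_goes_in_view(view: str, zone_in_view: Sequence[str],
                      import_unspecified: Optional[str] = None) -> bool:
    # Assumption: attribute values are passed in directly as strings/lists.
    if zone_in_view:
        # Explicitly placed zones go only to the views they name.
        return view in zone_in_view
    # "Unspecified" zone: imported unless the view says 'no' (unset means 'yes').
    return (import_unspecified or "yes") != "no"
```

Note that a view setting Import Unspecified Zones to 'no' still receives zones that name it explicitly.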

The DNS Server Group object type may also contain the following service-wide attribute:

Forward To: Specifies which type of machine members to use as targets for forwarding or stubbing when configuring zones on 'forward' or 'stub' type servers, respectively. The allowed values are 'master', 'slave', or 'both'. For example, if machine members of all types are present in a service group, and Forward To is set to 'both', then any zones added to a 'forward' type server will have 'forwarders' set to both master and slaves. In the absence of a set value for this parameter, 'master' is assumed by default.
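A sketch of how Forward To could drive the zone block of a 'forward' or 'stub' server. Only the master/slave/both selection rule comes from the description above; the member list, IP addresses, and exact output layout are assumptions for illustration.

```python
from typing import List, Optional, Tuple

Machine = Tuple[str, str]  # assumption: (IP address, Server Type)


def zone_block(zone: str, server_type: str, members: List[Machine],
               forward_to: Optional[str] = None) -> str:
    wanted = {"master": {"master"}, "slave": {"slave"},
              "both": {"master", "slave"}}[forward_to or "master"]  # default 'master'
    targets = "; ".join(ip for ip, role in members if role in wanted)
    if not targets:
        raise ValueError(f"{zone}: no {forward_to or 'master'} members to point at")
    if server_type == "forward":
        body = f"type forward;\n    forwarders {{ {targets}; }};"
    elif server_type == "stub":
        body = f"type stub;\n    masters {{ {targets}; }};"
    else:
        raise ValueError("Forward To only applies to 'forward' or 'stub' servers")
    return f'zone "{zone}" {{\n    {body}\n}};'
```

Refusing to emit an empty target list mirrors the rewritten dns-config.pl's stance of not producing unloadable configuration.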

The dns-config.pl script will first construct the set of servers which need configuration files, by iterating across the full list of available DNS Server Group objects and retaining all machine members with a valid version and type attribute.

For each host, dns-config.pl will again iterate across all DNS Server Group objects and collect all views applicable to the host.

Finally, for each view on the current host, dns-config.pl will iterate across all DNS Server Group objects once more, collecting all zones applicable to the current view. The whole process is shown in the following snippet of Perl-ish pseudocode:

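```
foreach $DnsSrvGrp (all DNS Server Group objects) {
    foreach machine member $HN of $DnsSrvGrp with valid version and type {
        push @{$HGroups{$HN}}, $DnsSrvGrp;
    }
}

foreach $HN (keys %HGroups) {
    %ViewOrder = ();    # start each host with an empty view list
    foreach $DnsSrvGrp (@{$HGroups{$HN}}) {
        verify version consistency for $HN;
        collect views in $ViewOrder{$VName};
    }
    # sort views by their Service View Order value, not by name
    foreach $VName (sort { $ViewOrder{$a} <=> $ViewOrder{$b} } keys %ViewOrder) {
        foreach $DnsSrvGrp (@{$HGroups{$HN}}) {
            collect zones from $DnsSrvGrp and process them for inclusion under $HN->$VName;
        }
    }
}
```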
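The three passes can also be written as a runnable sketch under simplifying assumptions. The data model is hypothetical, not NetReg's schema: each group maps machine members to `(version, type)`, view members to their Service View Order, and zone members to their Zone In View list. Version-consistency checking is omitted, Import Unspecified Zones is treated as 'yes' everywhere, and 'global' is placed last as described under Service View Order.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class DnsServerGroup:  # hypothetical stand-in for a NetReg DNS Server Group
    machines: Dict[str, Tuple[Optional[str], Optional[str]]]  # host -> (version, type)
    views: Dict[str, int] = field(default_factory=dict)       # view -> order
    zones: Dict[str, List[str]] = field(default_factory=dict) # zone -> Zone In View


def build_configs(groups: List[DnsServerGroup]) -> Dict[str, Dict[str, List[str]]]:
    # Pass 1: hosts with a valid version and type, plus the groups configuring them.
    host_groups: Dict[str, List[DnsServerGroup]] = defaultdict(list)
    for grp in groups:
        for host, (version, stype) in grp.machines.items():
            if version and stype and stype != "none":
                host_groups[host].append(grp)

    configs: Dict[str, Dict[str, List[str]]] = {}
    for host, hgroups in host_groups.items():
        # Pass 2: views for this host, fresh for every host.
        view_order: Dict[str, int] = {}
        for grp in hgroups:
            view_order.update(grp.views)
        # 'global' always comes last; other views sort by Service View Order.
        names = sorted(view_order, key=lambda v: (v == "global", view_order[v]))
        # Pass 3: zones for each view (unspecified zones go everywhere).
        configs[host] = {
            v: sorted({z for grp in hgroups for z, in_views in grp.zones.items()
                       if not in_views or v in in_views})
            for v in names
        }
    return configs
```

Keeping views and zones in separate groups, as recommended above, works naturally here: both groups list the same machines, and pass 3 merges them per host.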

When adding a zone to a 'master' server's config file, dns-config.pl will check whether the zone is configured for DDNS updates, and, if so, also add the necessary authorization bits to the configuration. This information originates primarily in the DDNS Authorization string attached to each zone, which contains DDNS key information. In addition, host-based authorization for DDNS updates can be configured via service groups of type DDNS_Zone_Auth. This service group type simply bundles zone and machine members, without any attributes. The semantics of a DDNS_Zone_Auth group is that any machine member will be allowed to DDNS-update any zone member, and dns-config.pl ensures this information is added to the relevant named.conf files in the form of ACL statements.
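The DDNS_Zone_Auth semantics ("every machine member may update every zone member") can be sketched as a union of IPs per zone, rendered as a BIND `acl` statement plus an `allow-update` clause. The ACL naming scheme, the key name, and the output layout are hypothetical; the original only states that the result takes the form of ACL statements.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Set, Tuple

AuthGroup = Tuple[List[str], List[str]]  # assumption: (machine IPs, zone names)


def zone_auth_acls(groups: List[AuthGroup]) -> Dict[str, List[str]]:
    """Map each zone to the sorted machine IPs allowed to update it."""
    allowed: Dict[str, Set[str]] = defaultdict(set)
    for machines, zones in groups:
        for zone in zones:  # every machine member may update every zone member
            allowed[zone].update(machines)
    return {zone: sorted(ips) for zone, ips in allowed.items()}


def render(zone: str, ips: List[str], key: Optional[str] = None) -> Tuple[str, str]:
    """Return (acl statement, allow-update line) using a made-up ACL name."""
    acl = "ddns-" + zone.replace(".", "-")
    acl_stmt = f'acl "{acl}" {{ ' + " ".join(f"{ip};" for ip in ips) + " };"
    grants = ([f'key "{key}";'] if key else []) + [f"{acl};"]
    return acl_stmt, "allow-update { " + " ".join(grants) + " };"
```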

The following is a list of issues to be aware of, in order to prevent

dns-config.pl from generating broken

named.conf files:

- A zone's dynamic update keys from NetReg will be added to the first valid (version, type) master server encountered; this master server will also be added to

dhcpd.conf on the dhcp servers, which will then send it dynamic updates when handing out leases.

- NetReg will allow multiple servers to be configured as masters, but only one of them will receive the necessary DDNS key material and be added to dhcpd.conf. Which of the multiple servers "wins" is not deterministic, so dns-config.pl will issue a warning when this situation is detected.

- NetReg will allow a zone to be associated with a server from more than one DNS Server Group. To avoid an error from dns-config.pl, only one such association should lead to named.conf material being generated for the server. In other words, all but one of these groups must list the server as versionless, so that it is only referenced in other machines' named.conf files as a forwarding or stubbing target.
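The last two pitfalls can be checked mechanically. The following Python sketch shows one way to do this under a hypothetical data model, in which each membership carries a version (None for versionless) and a role. It mirrors the behavior described above, raising an error for duplicate config-generating associations and a warning for multiple masters, but it is not dns-config.pl's actual code.

```python
import warnings
from collections import defaultdict


def check_zone_assignments(server_groups):
    """Flag the two NetReg pitfalls described above (hypothetical data model).

    Each group: {"name": str, "zones": [zone, ...],
                 "members": {host: (version or None, role)}}
    where role is "master", "slave", or "stub".
    Returns {zone: sorted list of versioned master hosts}.
    """
    masters = defaultdict(set)     # zone -> hosts that would act as master
    sources = defaultdict(list)    # (zone, host) -> groups generating config
    for grp in server_groups:
        for zone in grp["zones"]:
            for host, (version, role) in grp["members"].items():
                if version is None:
                    continue       # versionless: only referenced by others
                sources[(zone, host)].append(grp["name"])
                if role == "master":
                    masters[zone].add(host)

    # A zone may generate named.conf material for a host from only one group.
    for (zone, host), names in sources.items():
        if len(names) > 1:
            raise ValueError(f"{zone} on {host}: configured by {sorted(names)}")
    # Several masters are allowed, but only one receives the DDNS keys.
    for zone, hosts in masters.items():
        if len(hosts) > 1:
            warnings.warn(f"{zone}: multiple masters {sorted(hosts)}; "
                          "which one receives DDNS keys is not deterministic")
    return {zone: sorted(hosts) for zone, hosts in masters.items()}
```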

We are currently managing and monitoring the Pittsburgh campus DNS infrastructure, as well as the two off-site servers located on our New York satellite campus. Several other servers under the CMU umbrella are independently managed (Qatar, West, etc.) and, while we slave a few zones from them, we are not concerned with their configuration.

This section explains how the Pittsburgh campus servers are configured, both with global options and in terms of which zones they end up supporting.

Several DNS View Definition objects exist in NetReg with options for various (sub)types of DNS servers:

view.globalopts.common: common across all campus servers

view.globalopts.cache: specific to the caching servers

view.globalopts.auth: common across all authoritative servers

view.globalopts.authslave: specific to the authoritative slave servers only

view.globalopts.authmaster: specific to the master server only

For example, the location of the statistics file must be configured on all campus servers, and therefore must go under view.globalopts.common. Only caching servers allow recursion, so allow-recursion goes under view.globalopts.cache. Only the master needs to send notification messages (to its slaves) when zones get updated, so also-notify goes under view.globalopts.authmaster. All authoritative servers maintain journal files (for either dynamic updates or incremental zone transfers), so the 'max-journal-size 1024k' setting (which caps the otherwise unbounded growth of a journal and thus prevents performance issues when many full zone transfers need to occur simultaneously) goes under view.globalopts.auth.

New options should be added to exactly one of the above groups. In order to keep NetReg configuration relatively easy to follow, I strongly recommend that DNS Server Group objects used to connect machines with their global options be separate from those used to connect machines with the zones they need to serve. The following DNS Server Group objects are currently in use for setting global options:

master.globalopts.dns

- machine: nsauth-master.net.cmu.edu

- options: view.globalopts.[common,auth,authmaster]

slave.globalopts.dns

- machines: nsauth-[cyh,weh]-[a-w].net.cmu.edu

- options: view.globalopts.[common,auth,authslave]

caching.globalopts.dns

- machines: nscache-[cyh,weh]-[a-w].net.cmu.edu

- options: view.globalopts.[common,cache]

- extra views/options: view.proxy.[quickreg,authbridge].bind9

The two extra views (quickreg and authbridge) configured on the caching servers are specific to a limited number of sources (unregistered wired IP space and the AuthBridge/RAWR proxies, respectively), and serve a set of special, hand-configured zones to these clients to redirect certain types of traffic to our "portal"-style page, a.k.a. FirstConnect. The hand-configured zone files must currently be independently loaded onto each caching server (see Section 5 for a potential future enhancement to NetReg which would allow these zones to also be pushed to the servers automatically).
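To illustrate how these option objects compose, the following Python sketch models two rules: each option lives in exactly one view.globalopts.* object, and each option-bearing DNS Server Group pulls in a fixed set of those objects. The option values (the statistics file path, the "campus" ACL name, the also-notify address) are hypothetical placeholders. Only the object names and option keywords come from the text above.

```python
# Hypothetical option values; only the object names and option keywords
# (statistics-file, allow-recursion, max-journal-size, also-notify)
# come from the text.
GLOBALOPTS = {
    "view.globalopts.common": ['statistics-file "/var/named/stats";'],
    "view.globalopts.cache": ["allow-recursion { campus; };"],
    "view.globalopts.auth": ["max-journal-size 1024k;"],
    "view.globalopts.authslave": [],
    "view.globalopts.authmaster": ["also-notify { 192.0.2.53; };"],
}

# Which globalopts objects each option-bearing DNS Server Group pulls in.
SERVER_GROUPS = {
    "master.globalopts.dns": ["common", "auth", "authmaster"],
    "slave.globalopts.dns": ["common", "auth", "authslave"],
    "caching.globalopts.dns": ["common", "cache"],
}


def check_unique(globalopts):
    """Enforce the rule that each option keyword lives in exactly one object."""
    owner = {}
    for obj, options in globalopts.items():
        for opt in options:
            keyword = opt.split()[0]
            if keyword in owner:
                raise ValueError(f"{keyword} set in {owner[keyword]} and {obj}")
            owner[keyword] = obj


def options_for(server_group):
    """Concatenate the global options a server group's machines receive."""
    opts = []
    for suffix in SERVER_GROUPS[server_group]:
        opts.extend(GLOBALOPTS["view.globalopts." + suffix])
    return opts


check_unique(GLOBALOPTS)
for name in SERVER_GROUPS:
    print(name, options_for(name))
```

Keeping each keyword in a single object means a change such as adjusting max-journal-size touches one NetReg object and reaches every authoritative server, master and slaves alike.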

There are three types of zones for which we are authoritative:

- Top Layer: cmu.edu, cmu.net, 2.128.in-addr.arpa, cmu.local, etc., and a whole set of "vanity" domains for various campus entities, such as cyrusmail.org.

- Subdomains: net.cmu.edu, andrew.cmu.edu, 6.2.128.in-addr.arpa, etc.

- Slaved zones from other servers: 237.128.in-addr.arpa, west.cmu.edu, qatar.cmu.edu, etc. Slaved zones may be at the top layer (237.128.in-addr.arpa) or subdomains (the remaining examples just listed).

We map these zones to our servers using four DNS Server Group objects:

toplayer.dns: all top layer zones under our full control are configured as follows:

- as master zone files on nsauth-master.net.cmu.edu

- slaved from nsauth-master on nsauth-[cyh,weh]-[a-w].net.cmu.edu

- stubbed from nsauth-[cyh,weh]-[a-w] on nscache-[cyh,weh]-[a-w]

toplayer-slaved.dns: all top layer zones we do NOT control:

- nsauth-master is listed as a versionless master to avoid attempts to generate conflicting zone configuration (see 4.2). The zones are slaved from their respective masters onto nsauth-master via separate, dedicated DNS Server Group objects.

- further slaved from nsauth-master on nsauth-[cyh,weh]-[a-w]

- stubbed from nsauth-[cyh,weh]-[a-w] on nscache-[cyh,weh]-[a-w]

subdomains.dns: all subdomains under our full control:

- master zone files on nsauth-master

- slaved from nsauth-master on nsauth-[cyh,weh]-[a-w]

- no configuration is necessary on the caches, since stubbing the top layer domains lets them recursively follow sub-delegations.

subdomains-slaved.dns: all subdomains we do not control:

- again, nsauth-master is listed as a versionless master, and zones are slaved from their respective masters via separate, dedicated DNS Server Group objects.

- slaved from nsauth-master on nsauth-[cyh,weh]-[a-w]

- still no configuration is necessary on the caches, since stubbing the top layer domains lets them recursively follow sub-delegations.

When a new zone is created in NetReg, it should be assigned to precisely one of the above service groups. If the zone is under our control, that will most likely be subdomains.dns (unless, of course, we're adding a new vanity top layer domain like awesome-cmu-project.org, in which case the service group should be toplayer.dns).
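The four zone service groups reduce to a small table of roles per server class. The Python sketch below renders a minimal named.conf zone statement from that table. The file naming convention and the upstream address are hypothetical. For the *-slaved groups, the upstream seen by nsauth-master would be the external master configured through its dedicated DNS Server Group.

```python
# Role each server class plays for a zone, per zone service group;
# None means the class needs no configuration for that zone.
ZONE_ROLES = {
    "toplayer.dns":          {"master": "master", "slave": "slave", "cache": "stub"},
    "toplayer-slaved.dns":   {"master": "slave",  "slave": "slave", "cache": "stub"},
    "subdomains.dns":        {"master": "master", "slave": "slave", "cache": None},
    "subdomains-slaved.dns": {"master": "slave",  "slave": "slave", "cache": None},
}


def zone_stanza(zone, service_group, server_class, upstream):
    """Render a minimal named.conf zone statement for one server class.

    upstream is the address the zone is transferred or stubbed from; the
    zone-file naming used for masters is a hypothetical convention.
    """
    role = ZONE_ROLES[service_group][server_class]
    if role is None:
        return None  # caches reach subdomains by following delegations
    lines = [f'zone "{zone}" {{', f"    type {role};"]
    if role == "master":
        lines.append(f'    file "{zone}.zone";')
    else:
        lines.append(f"    masters {{ {upstream}; }};")
    lines.append("};")
    return "\n".join(lines)


print(zone_stanza("cmu.edu", "toplayer.dns", "cache", "192.0.2.1"))
```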

The New York machines (ny-server-[03,04]) are multi-purpose servers, and the DNS service they offer also serves multiple purposes. Both machines act as caching servers for campus clients. ny-server-03 is master for the IP subnet we use at our NY satellite campus (so that their on-site dhcp server can perform DDNS updates locally, and have the result available in the event of a WAN outage). ny-server-03 also acts as an off-site backup authority for our top layer zones (and is therefore listed as an additional slave in the toplayer*.dns groups). Separate options are configured for these servers via the ny-*.globalopts.dns groups.

Several tasks are encountered on a regular basis when administering DNS through NetReg. The list includes zone creation, adding CNAMEs to external entities, etc. More tasks will be described here as they are identified along the way.

DNS (sub)domains are improperly referred to as "zones" in NetReg parlance. When a new one must be created, we start at NetReg's "Add a DNS Zone" form. First, we fill in the zone name. Forward zones will be named something like foo.cmu.edu or myvanitydomain.org. Reverse zones look like 123.237.128.in-addr.arpa. The Type pull-down allows three different subtypes for both forward (fw-) and reverse (rv-) zone types:

toplevel: A standalone domain with its own dedicated zone file on the master DNS server. A more appropriate name for this could have been '*-standalone'.

permissible: A domain whose records are hosted within its parent's zone file. A better name for this category could have been '*-parent_hosted'.

delegated: A domain we delegate to a (set of) DNS server(s) outside our control. Only NS records are generated for this type of domain, and they are published into the domain's parent's zone file.
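To make the three subtypes concrete, here is a Python sketch of where records for a given name would be published. It assumes that records land in the nearest enclosing toplevel zone, that permissible domains are skipped on the way up, and that the walk stops below delegated domains. The example zone table, including dept.cmu.edu, is hypothetical.

```python
def hosting_zone(name, zones):
    """Return the NetReg zone whose file would hold records for `name`.

    zones maps a domain to its subtype: "toplevel", "permissible" or
    "delegated". Walk up the labels of `name`: permissible domains are
    hosted in their parent, so keep climbing; a delegated domain's own NS
    records go to its parent, but anything below it is not our data.
    """
    labels = name.rstrip(".").split(".")
    for i in range(len(labels)):
        domain = ".".join(labels[i:])
        subtype = zones.get(domain)
        if subtype == "toplevel":
            return domain
        if subtype == "delegated" and i > 0:
            return None
    return None


zones = {  # hypothetical zone table
    "cmu.edu": "toplevel",
    "dept.cmu.edu": "permissible",
    "cs.cmu.edu": "delegated",
}
print(hosting_zone("www.dept.cmu.edu", zones))  # cmu.edu
```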

The remaining fields (under SOA Parameters) are only applicable to *-toplevel (a.k.a. standalone) domains (or zones):

Email: Use '.' instead of '@', as expected by the DNS SOA record formatting convention. Typically set to 'host-master.andrew.cmu.edu', less frequently to 'advisor.andrew.cmu.edu'.

Host: Leave blank. This value is ignored by dns.pl, which overwrites it with the server marked as master in the DNS Server Group which contains the zone.

Refresh, Retry, Expire, Minimum, Default: Leave blank. NetReg will automatically fill in default values, which are only worth modifying under very specific (and unlikely) circumstances.

DDNS Authorization: If blank, NetReg will push new versions of the zone file to the master server via scp, which will be the only method of updating records within that domain. Populating this field with appropriate key information will enable both NetReg and other systems (such as DHCP, lbnamed, etc.) to dynamically update the zone. An auxiliary command-line script shipped with NetReg (ddns-auth-keygen) should be used to automatically generate content to be pasted into this field, if management of the zone via DDNS is desired.
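The Email conversion follows the general DNS master-file convention (RFC 1035), in which the mailbox's local part becomes the first label of the SOA RNAME. The sketch below also escapes dots inside the local part, a case our usual addresses never hit. This document does not establish whether NetReg performs that escaping itself, so treat that part as an assumption.

```python
def soa_rname(email):
    """Convert a mailbox address into SOA RNAME form (RFC 1035 master files).

    The '@' becomes a label separator; any '.' inside the local part is
    escaped so it is not mistaken for a separator.
    """
    local, domain = email.rsplit("@", 1)
    return local.replace(".", "\\.") + "." + domain


print(soa_rname("host-master@andrew.cmu.edu"))  # host-master.andrew.cmu.edu
```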

Once created, standalone and delegated domains also need NS records, which must be added under the DNS Resources section. Domains under our "jurisdiction" typically use nsauth1.net.cmu.edu and nsauth2.net.cmu.edu as their NS records.

Standalone domains should also be added to the appropriate DNS Server Group, in order to have their zone file pushed to the appropriate master server, and replicated on the appropriate slave servers. Domains under our control should be members of either toplayer.dns or (much more likely) subdomains.dns.

Finally, standalone and parent-hosted (i.e., toplevel and permissible) domains may be associated with one or more subnets, for the purpose of allowing machines to be registered with IPs within those subnets and names within those domains.

We are frequently asked to publish CNAME records pointing at an external entity, such as:

guides.library.cmu.edu. IN CNAME cmu.libguides.com.

which would allow clients attempting to connect to an advertised destination of guides.library.cmu.edu to be redirected to an externally hosted service at cmu.libguides.com.

NetReg will only generate CNAME records when a CNAME resource is added to a registered host. For example, adding a somecname.domain.cmu.edu CNAME resource to somehost.net.cmu.edu will result in the following record being added under the domain.cmu.edu zone:

somecname IN CNAME somehost.net.cmu.edu.

NetReg will throw an error if the CNAME's domain (domain.cmu.edu) does not exist as a NetReg-managed "zone", since there would be no place to publish the CNAME record into DNS. Using guides.library as an example, we frequently see users mistakenly register something like guides.library.cmu.edu in NetReg and then attempt to add a CNAME resource named cmu.libguides.com to it. Not only will this fail because libguides.com is not a NetReg-managed zone, but even if it succeeded, it would publish a CNAME pointing in the opposite direction from what is actually desired, in the wrong zone (libguides.com instead of library.cmu.edu):

cmu.libguides.com. IN CNAME guides.library.cmu.edu.

To correctly complete this task, we need to perform the following steps:

- Create libguides.com as a fw-permissible zone. We don't really need NetReg to ever generate and publish a libguides.com zone file (and, for the record, com is also registered as fw-permissible), but we do need to be able to register fake hosts named *.libguides.com, which requires the domain to exist in NetReg.

- Associate the newly created libguides.com zone with the Reserved Devices subnet.

- Register a machine named cmu.libguides.com on the Reserved Devices subnet, using Mode = reserved.

- On the newly registered machine, under DNS Resources, add a CNAME named guides.library.cmu.edu. This should work just fine, since library.cmu.edu is a valid zone controlled by NetReg.

The next time NetReg generates DNS data, a guides CNAME record will be added under the library.cmu.edu zone, pointing toward cmu.libguides.com, which is the desired result.
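The direction of the CNAME, and which zone must exist, can be captured in a few lines of Python. This is a simplified model of the behavior described above, not NetReg's implementation. It takes the alias's zone to be the alias minus its first label, and the zone table is hypothetical.

```python
def cname_record(alias, target_host, managed_zones):
    """Model how a CNAME resource attached to a registered host is published.

    alias is the CNAME resource's name, target_host the registered (possibly
    reserved/fake) host it is attached to. The record goes into the zone
    named by the alias minus its first label; this sketch requires that
    zone to be NetReg-managed, as the text describes.
    """
    label, _, zone = alias.partition(".")
    if zone not in managed_zones:
        raise ValueError(f"{zone} is not a NetReg-managed zone")
    # The trailing dot makes the target absolute in zone-file syntax.
    return zone, f"{label} IN CNAME {target_host}."


managed = {"library.cmu.edu", "libguides.com"}  # hypothetical zone table
print(cname_record("guides.library.cmu.edu", "cmu.libguides.com", managed))
```

Attaching the resource the wrong way round (a cmu.libguides.com CNAME on a guides.library.cmu.edu host) fails in this model whenever libguides.com is not a managed zone, which matches the error users typically hit.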

DNSSec is only supported on DDNS-enabled zones. In order to keep a loose coupling between NetReg and a DNSSec-enabled master server (read: in order to avoid replicating half the DNSSec code in NetReg), most DNSSec-specific operations (e.g. zone signature and key maintenance) happen on the master server directly, without NetReg's knowledge or involvement. Since zone SOA serials must be bumped during DNSSec maintenance, and NetReg already has a model of operation under which it does not keep track of these serials (DDNS), it makes sense to make DDNS a precondition for a zone to be eligible for DNSSec. Additionally, starting with bind version 9.7, DDNS-enabled zones benefit from automated DNSSec maintenance via the 'auto-dnssec maintain' zone-specific option in named.conf. Slave servers also don't need to know anything about DNSSec management (besides being enabled to respond to DNSSec queries by returning RRSIG records, if available), since they simply slave whatever the master server transfers to them.

NetReg's global master-specific option list has been updated to include key-directory "/var/named/CMU/keys", which tells the master server where to find zone-specific DNSSec keys if/when it needs to access them. If, in addition to the expected content of a zone's 'DDNS Authorization' (see 4.4.1), we also include the string "dnssec:ena", the dns-config.pl script will add 'auto-dnssec maintain' to the zone's list of options, in effect telling the master server to automatically manage DNSSec signatures on that zone.
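A Python sketch of the dnssec:ena check follows. The real layout of the DDNS Authorization string (see 4.4.1) is not reproduced here, so the whitespace-separated token format and the "key:example-key" token are hypothetical. The sketch only shows the logic of adding 'auto-dnssec maintain' when the flag accompanies DDNS key information.

```python
def dnssec_zone_options(ddns_auth):
    """Extra zone options implied by a zone's DDNS Authorization string.

    Hypothetical format: whitespace-separated tokens, where "dnssec:ena"
    sits alongside whatever DDNS key information the string carries.
    """
    tokens = ddns_auth.split()
    key_info = [t for t in tokens if t != "dnssec:ena"]
    if not key_info:
        # No DDNS key information means no DDNS, and DNSSec requires DDNS;
        # this sketch simply ignores the flag in that case.
        return []
    if "dnssec:ena" in tokens:
        return ["auto-dnssec maintain;"]
    return []


print(dnssec_zone_options("key:example-key dnssec:ena"))
```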

The operations described here are only intended to be performed on the master DNS server. Slaves will automatically receive transferred copies of the zone(s) managed by the master, and need not get involved in DNSSec key management.

Before a zone is first signed, we need to generate a Key Signing Key (KSK) and a Zone Signing Key (ZSK). Current DNSSec best practices recommend that a larger KSK be used as a "secure entry point" into the zone. This key will sign the smaller ZSK, which in turn will sign all other records within the zone (thus reducing the size of DNSSec-enabled replies). ZSKs can be rolled over more frequently, since, unlike KSKs, they don't require updates to the zone's parent. We have standardized on 2048-bit KSKs and 1024-bit ZSKs. To generate these keys, issue the following commands on the master server:

dnssec-keygen -r /dev/urandom -K /var/named/CMU/keys -n ZONE -3 \
    -b 2048 -f KSK $zone_name

dnssec-keygen -r /dev/urandom -K /var/named/CMU/keys -n ZONE -3 \
    -b 1024 $zone_name

where $zone_name is the name of the zone in question (e.g. cmu.edu). On RHEL, make sure that all such keys are owned by named:named, so the bind daemon can access them.
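For scripting, the two commands above can be wrapped in Python. This sketch assumes it runs as root on the master with BIND's tools on the PATH. It relies on dnssec-keygen printing the new key's base name on stdout, and it performs the named:named ownership change described above.

```python
import shutil
import subprocess
from pathlib import Path

KEY_DIR = "/var/named/CMU/keys"


def keygen_commands(zone_name, key_dir=KEY_DIR):
    """The two dnssec-keygen invocations from the text, as argv lists."""
    common = ["dnssec-keygen", "-r", "/dev/urandom", "-K", key_dir,
              "-n", "ZONE", "-3"]
    ksk = common + ["-b", "2048", "-f", "KSK", zone_name]
    zsk = common + ["-b", "1024", zone_name]
    return [ksk, zsk]


def generate_keys(zone_name, key_dir=KEY_DIR):
    """Run both commands (as root, on the master) and chown the results."""
    for argv in keygen_commands(zone_name, key_dir):
        # dnssec-keygen prints the base name of the new key pair on stdout.
        result = subprocess.run(argv, check=True, capture_output=True, text=True)
        base = result.stdout.strip()
        for suffix in (".key", ".private"):
            shutil.chown(Path(key_dir) / (base + suffix), user="named", group="named")
```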

A DS record corresponding to the KSK must be uploaded to the zone's parent (edu in our case). To generate this record, use the following command:

dnssec-dsfromkey -f $zone_file $zone_name > dsset-$zone_name.

where $zone_file is the file name under which the zone is stored on the master (e.g. CMU.EDU.zone). Upload the DS record to the parent via whatever interface is available (e.g., http://net.educause.edu/edudomain/ for .edu domains).

To force bind to (re-)sign the zone immediately, use:

rndc sign $zone_name

To roll over a key, first generate a replacement KSK and/or ZSK (using dnssec-keygen as illustrated above in 5.2.1). Next, calculate the largest advertised TTL within the zone (MaxTTL). A quick and easy way to accomplish this would be:

named-checkzone -o - $zone_name $zone_file | awk '{print $2}' | sort -n | tail -n 1

If this value is very large, the DNSKEY RRSIG may expire sooner than MaxTTL, in which case we may use that shorter wait time instead.
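The MaxTTL computation and the choice of the shorter wait can also be sketched in Python. The parser assumes that named-checkzone's canonical output places the TTL in the second field of each record line, the same assumption the awk one-liner makes. The caller supplies the RRSIG expiration input.

```python
import subprocess


def max_ttl(canonical_zone_text):
    """Return the largest TTL in named-checkzone -o - output, or None.

    Record lines carry the TTL in their second field; status lines such
    as "OK" do not have a numeric second field and are skipped.
    """
    rows = (line.split() for line in canonical_zone_text.splitlines())
    ttls = [int(f[1]) for f in rows if len(f) >= 2 and f[1].isdigit()]
    return max(ttls, default=None)


def rollover_wait(max_ttl_seconds, dnskey_rrsig_expires_in):
    """Wait for the smaller of MaxTTL and the time left on the DNSKEY RRSIG."""
    return min(max_ttl_seconds, dnskey_rrsig_expires_in)


def zone_max_ttl(zone_name, zone_file):
    """Run named-checkzone (which must be installed) and compute MaxTTL."""
    out = subprocess.run(["named-checkzone", "-o", "-", zone_name, zone_file],
                         check=True, capture_output=True, text=True).stdout
    return max_ttl(out)
```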

We now need to re-sign the zone with

rndc sign $zone_name

which will include both the old and new versions of the key being rolled over.

If this is the KSK being rolled over, we must now also generate a DS record for the new version of the key:

dnssec-dsfromkey -f $zone_file $zone_name > dsset-$zone_name.

Upload it to the parent, and wait for its publication (use whois to determine when publication has occurred).

At this point, we mark the old key for expiration/deletion $MaxTTL seconds from now (with $MaxTTL as calculated above):

dnssec-settime -K /var/named/CMU/keys \
    -R +$MaxTTL -I +$MaxTTL -D +$MaxTTL $old_key_file

Look for $old_key_file under /var/named/CMU/keys. It should look something like K$zone_name.+007+$old_key_serial_no.key. The old key should automatically stop being used shortly after now + $MaxTTL, but 'rndc sign' may be used anytime after that deadline to force bind to re-sign the zone without the old key.
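The dnssec-settime step can also be assembled programmatically. The sketch below builds the same argument list as the command above from three inputs: the zone name, the old key's tag (the $old_key_serial_no in the file name), and MaxTTL. Algorithm 7 matches the +007 in the example file name. The helper names are hypothetical.

```python
KEY_DIR = "/var/named/CMU/keys"


def key_file_name(zone_name, key_tag, algorithm=7):
    """Public key file name following the K<zone>.+<alg>+<tag>.key pattern."""
    return f"K{zone_name}.+{algorithm:03d}+{key_tag:05d}.key"


def settime_command(zone_name, old_key_tag, max_ttl, key_dir=KEY_DIR):
    """argv for the dnssec-settime call, with every event at now + max_ttl."""
    offset = f"+{max_ttl}"
    return ["dnssec-settime", "-K", key_dir,
            "-R", offset, "-I", offset, "-D", offset,
            key_file_name(zone_name, old_key_tag)]


print(" ".join(settime_command("cmu.edu", 1234, 86400)))
```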

This section serves as a running wish- (and ToDo) list for remaining DNS related work.

- IPv6 service: all our DNS servers are IPv6-enabled. We are currently updating the campus backbone software, and IPv6 networking will be made available to the servers within a month, enabling them to respond to IPv6 queries from on- and off-campus clients.

- DNSSec: NetReg needs the ability to place DS record attributes on a child zone such as andrew.cmu.edu in order to publish them in the parent (e.g. cmu.edu), much as NS records are currently handled. This will allow us to sign zones below cmu.edu, whether they're separate files we maintain (andrew) or delegated (cs, qatar, etc.).

- Rewrite dns.pl (zone file generator). One idea is to move zone and config generation off NetReg and onto each server (using SOAP). Short of that, we should at least clean up the bitrot, since the code has not been touched in years. I am starting a list of issues I have found in dns.pl here, to keep track of them:

  - under some circumstances, dns.pl will not send nsupdates to remove stale NS records from a zone.

- Implement NetReg support for "virtual" zones, which get all their records configured within the zone (and receive no data from subnet associations). These could then be used to replace the hand-written zone files used with quickreg and authbridge (see 4.3.1).

Figure 1. Network Topology vs. DNS Server Placement

Prior to the upgrade, we had a relatively large number of DNS server classes, each responsible for a separate subset of our (sub)domains, which made the overall system unnecessarily difficult to comprehend. To illustrate, we had:


Figure 2. Overview of the CMU campus DNS architecture

The only special case server is the "shadow" authoritative master, which is the single point of update for all external systems (such as NetReg, dhcp, lbnamed, etc.). All anycast-enabled authoritative servers are configured as slaves to this machine. Redundancy and failover for the shadow master are accomplished through VMotion, as the shadow master is deployed as a guest on our VM infrastructure. Since queries for authoritative DNS data are only ever sent to the authoritative anycast service IPs, a temporary software failure on the shadow master will only impede the ability to enact further DNS updates, but never prevent querying existing data. An illustration of how the various servers interact with each other is given in Figure 2.

We can now count the minimum number of servers required to support this architecture with physical redundancy:
